Artificial Intelligence (AI) has rapidly evolved from a niche academic pursuit into a transformative force touching nearly every aspect of modern life. In recent years, breakthroughs in machine learning algorithms, the availability of big data, and powerful computing hardware have driven unprecedented AI capabilities. AI systems today can perform certain tasks at or above human level, such as image recognition and language understanding (weforum.org), while still struggling with others like commonsense reasoning. Governments, industry leaders, and the public are increasingly aware of AI’s profound implications – a 2023 survey showed 66% of people expect AI to significantly affect their lives within 3-5 years, and over half report feeling nervous about its growing influence (weforum.org). This extensive whitepaper examines the state of the art in AI from multiple perspectives – technological advances, scientific applications, policy and ethics – and analyzes the wide-ranging impacts of AI on humans, animals, nature, and climate. It also explores future scenarios from optimistic to cautionary, backed by peer-reviewed research and expert reports.

Technological Perspective: AI Advancements and Key Players

Machine Learning and Deep Learning

Contemporary AI is dominated by machine learning techniques, especially deep learning neural networks. Deep learning has enabled striking advances in fields like computer vision and natural language processing. For example, modern image classifiers can now exceed human accuracy on standard benchmarks (weforum.org). Likewise, large language models can process and generate text with human-like fluency in many cases. These advances are driven by ever-growing model sizes and training data: OpenAI’s GPT-3 model contains 175 billion parameters, over 100× more than its predecessor GPT-2 (technologyreview.com), demonstrating how scaling up neural networks has dramatically improved performance. However, AI still falls short on tasks requiring complex reasoning or true commonsense understanding (weforum.org), highlighting that human-level cognition in machines remains an open challenge.
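
As a rough check on the scaling claim, a widely used back-of-the-envelope approximation for decoder-only transformers puts the weight count of the transformer blocks at about 12 × n_layers × d_model². The sketch below applies it, assuming the published configurations of the largest models (96 layers of width 12,288 for GPT-3; 48 layers of width 1,600 for GPT-2) and a 50,257-token vocabulary. It ignores biases, layer norms, and position embeddings, so the totals are estimates, not exact counts.

```python
# Rough parameter estimate for a decoder-only transformer (an approximation, not exact).
# Each layer has ~4*d^2 attention weights (Q, K, V and output projections)
# plus ~8*d^2 feed-forward weights (two d x 4d matrices), i.e. ~12*d^2 per layer.

def approx_params(n_layers: int, d_model: int, vocab_size: int = 50_257) -> int:
    """Approximate weight count: transformer blocks plus the token-embedding matrix."""
    block_params = 12 * n_layers * d_model ** 2
    embedding_params = vocab_size * d_model
    return block_params + embedding_params

# Configurations as reported for the largest GPT-3 and GPT-2 models.
gpt3 = approx_params(n_layers=96, d_model=12_288)
gpt2 = approx_params(n_layers=48, d_model=1_600)

print(f"GPT-3 ~ {gpt3 / 1e9:.0f}B parameters")
print(f"GPT-2 ~ {gpt2 / 1e9:.2f}B parameters")
print(f"ratio ~ {gpt3 / gpt2:.0f}x")
```

The estimate lands close to the published 175 billion and 1.5 billion figures, and the ratio of the two comes out a little above 100×, consistent with the comparison in the text.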

Generative Models and New Frontiers

One of the most headline-grabbing developments is generative AI. Models like GPT-3, GPT-4, and Google’s PaLM in language, or DALL·E and Stable Diffusion in image generation, can create fluent text and images from prompts, and newer systems extend this to video. These generative models are powered by deep neural networks trained on massive datasets, and they exhibit creativity within the bounds of their training. The rapid deployment of ChatGPT in late 2022 brought generative AI to millions of users, showcasing both its utility and the need for careful oversight (weforum.org). The surge of investment reflects this trend: in 2023, private funding for generative AI reached $25.2 billion, nearly eight times the amount in 2022 (weforum.org). Cutting-edge AI models are also increasingly expensive to develop; training OpenAI’s GPT-4 was estimated to require about $78 million worth of compute, a cost accessible only to well-resourced actors (weforum.org). These developments indicate that progress in AI is accelerating but concentrated among those with data, talent, and computational power.
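
Text generators of this kind produce output one token at a time: the network scores every candidate next token, and the system samples from the resulting probability distribution. The sketch below shows only that final sampling step, with a temperature parameter; the four-word vocabulary and the scores are made-up placeholders standing in for a real model's output.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The cat sat on the".
vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

rng = random.Random(0)
print([sample_next_token(vocab, logits, temperature=0.7, rng=rng) for _ in range(5)])
```

Lower temperatures make the output more predictable and higher ones make it more varied; deployed systems typically add refinements such as top-k or nucleus sampling on top of this basic step.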

Robotics and Autonomous Systems

AI-driven robotics has also made significant strides. Advanced robots are now capable of navigating complex environments, learning motor skills, and performing tasks that previously required human labor. In manufacturing, the global adoption of industrial robots hit a record high – over 550,000 new robots were installed in factories worldwide in 2022, a 5% increase from the previous year (esirobotics.com). These robots are becoming smarter and more adaptable due to AI. Humanoid robots like Boston Dynamics’ Atlas can execute agile movements (even parkour routines) that were unimaginable a decade ago, illustrating the leaps in robotic control systems. Autonomous vehicles are another frontier: self-driving car programs (e.g. Waymo, Cruise) have logged millions of driverless miles on public roads, using AI to perceive and make decisions in real time. While full autonomy at scale is still under development, these examples show how AI algorithms enable machines to perform physical-world tasks with increasing reliability.

Key Players and Institutions in AI Research

The AI research ecosystem spans big tech companies, elite universities, and startups, but recent years have seen industry take the lead in driving frontier progress. Until 2014, most cutting-edge machine learning models came from academia; in 2023, industry produced 51 notable models, compared with 15 from academia (weforum.org). Tech giants such as Google (whose Google Brain and DeepMind labs merged in 2023 to form Google DeepMind), Microsoft (which partners with OpenAI), Meta (Facebook AI Research), Amazon, and Apple invest heavily in AI breakthroughs. These corporations command vast datasets, computing infrastructure, and expert talent, giving them an edge in building state-of-the-art systems. Academic institutions (MIT, Stanford, Carnegie Mellon, Oxford, Tsinghua, and others) remain critical for foundational research and training new scientists, often in collaboration with industry.

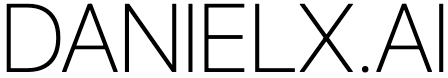

On the geopolitical stage, the United States is currently the leading source of top-tier AI models: 61 notable models originated from US-based institutions in 2023, compared with 21 from the European Union and 15 from China (weforum.org).

Scientific Perspective: AI in Medicine, Biology, and Environmental Science

AI’s powerful pattern-recognition and predictive capabilities are being harnessed across scientific domains to accelerate discovery and solve complex problems. Researchers in medicine, biology, environmental science and beyond have integrated AI tools – often with groundbreaking results.

Medicine and Healthcare

In healthcare, AI is used to improve diagnostics, personalize treatments, and streamline clinical workflows. Medical imaging analysis has seen especially robust progress: deep learning models can detect diseases from X-rays, CT scans, or MRIs with accuracy comparable to expert physicians. For instance, in breast cancer screening, an AI system assisting a radiologist was able to detect cancers at a 4% higher rate than the standard practice of two radiologists double-reading mammograms (managedhealthcareexecutive.com). Multiple studies have found that AI diagnostic accuracy in mammography and dermatology can match or exceed human specialists (managedhealthcareexecutive.com, managedhealthcareexecutive.com), promising earlier and more reliable detection of cancers. AI is also accelerating drug discovery. A notable case was the identification of halicin as an antibiotic by an MIT machine-learning model. Screening a library of about 6,000 existing compounds, the model flagged halicin, a molecule previously investigated as a diabetes drug, which in lab tests killed many dangerous bacteria, including strains resistant to all known antibiotics (news.mit.edu). The team then used the model to screen more than 100 million further molecules for candidates. This was one of the first examples of AI discovering a new antibiotic (news.mit.edu), illustrating how AI can sift through chemical space far faster than traditional methods. Additionally, AI-driven predictive models in hospitals can analyze electronic health records to forecast patient outcomes – for example, predicting which patients are at risk of complications like sepsis, and enabling preventative care. While challenges remain in validating algorithms and avoiding biases in clinical settings, AI is poised to augment doctors and researchers, leading to more precise and proactive medicine.
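
A model-guided screening workflow of this kind can be sketched as: score every candidate with a trained model, drop close analogues of existing antibiotics, and keep the top-ranked remainder. In the sketch, the predicted scores and fingerprint bit sets are hypothetical placeholders (a real pipeline would compute fingerprints with a cheminformatics toolkit and scores with a trained network), and Tanimoto similarity on fingerprint bits stands in as one common measure of structural novelty; it is not presented as the MIT team's exact procedure.

```python
def tanimoto(a: set[int], b: set[int]) -> float:
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def prioritize(candidates, known_actives, top_k=2, max_similarity=0.4):
    """Rank candidates by predicted activity, dropping close analogues of known drugs.

    candidates: list of (name, predicted_score, fingerprint_bits) tuples.
    known_actives: list of fingerprint bit sets for existing antibiotics.
    """
    novel = [
        (name, score)
        for name, score, fp in candidates
        if all(tanimoto(fp, ref) <= max_similarity for ref in known_actives)
    ]
    novel.sort(key=lambda item: item[1], reverse=True)
    return novel[:top_k]

# Hypothetical model outputs and fingerprints, for illustration only.
candidates = [
    ("cmpd_A", 0.91, {1, 2, 3, 4}),
    ("cmpd_B", 0.88, {10, 11, 12}),
    ("cmpd_C", 0.75, {20, 21, 22, 23}),
    ("cmpd_D", 0.40, {30, 31}),
]
known_antibiotics = [{1, 2, 3, 5}]

print(prioritize(candidates, known_antibiotics))
```

Here the highest-scoring compound is discarded because it closely resembles a known antibiotic, so the shortlist favours structurally different candidates, which is the property that makes a hit like halicin interesting.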

Biology and Biotechnology

AI has become an indispensable tool in biology, where it’s helping unravel the complexity of living systems. A landmark achievement came from DeepMind’s AlphaFold system, which largely solved the 50-year grand challenge of protein structure prediction. AlphaFold’s deep learning model can predict 3D protein structures from amino acid sequences, often with near-atomic accuracy. In 2022, researchers released over 200 million protein structure predictions – essentially all proteins known to science – using AlphaFold (nature.com). This achievement provides biologists with structural insights into almost any protein of interest, accelerating research in drug design, enzymology, and molecular biology. Scientists now have a database of predicted protein shapes for most organisms on Earth (nature.com), enabling breakthroughs such as designing new enzymes and understanding diseases at the molecular level. Beyond proteins, AI is applied in genomics to identify gene-disease links and in synthetic biology to optimize gene circuits. Robotic labs guided by AI, often called “robot scientists,” can plan and run experiments autonomously. For example, Synbot, an AI-driven robotic chemist, can design and synthesize organic molecules with minimal human intervention (weforum.org). Such systems can use techniques such as reinforcement learning to decide which experiments to conduct, speeding up the discovery of new materials or drugs. AI models are also aiding neuroscientists in mapping brain activity patterns and helping ecologists decipher complex ecosystem dynamics. Across the life sciences, AI acts as a catalyzing force, analyzing vast datasets (genomic sequences, bioimages, experimental data) to find patterns and hypotheses that humans might miss, and suggesting optimal experimental directions.
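
The "decide which experiment to run next" loop can be illustrated with a multi-armed bandit, one of the simplest members of the reinforcement-learning family. The sketch uses the UCB1 rule to choose among a few candidate reaction conditions under a fixed experiment budget; the condition names and the simulated yield function are hypothetical stand-ins for a real robotic experiment, and systems such as Synbot may rely on different optimization methods.

```python
import math
import random

def ucb1_select(counts, totals, t):
    """Pick the arm with the highest upper confidence bound (UCB1)."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # try every condition once before exploiting
    return max(
        range(len(counts)),
        key=lambda a: totals[a] / counts[a] + math.sqrt(2 * math.log(t) / counts[a]),
    )

def run_campaign(conditions, run_experiment, budget):
    """Spend a fixed experiment budget, favouring conditions with high observed yield."""
    counts = [0] * len(conditions)
    totals = [0.0] * len(conditions)
    for t in range(1, budget + 1):
        arm = ucb1_select(counts, totals, t)
        counts[arm] += 1
        totals[arm] += run_experiment(conditions[arm])
    return dict(zip(conditions, counts))

# Hypothetical conditions and a noisy simulated yield clipped to [0, 1].
true_yield = {"solvent_A/25C": 0.35, "solvent_B/40C": 0.60, "solvent_C/60C": 0.45}
rng = random.Random(42)

def simulated_experiment(condition):
    return min(1.0, max(0.0, rng.gauss(true_yield[condition], 0.1)))

print(run_campaign(list(true_yield), simulated_experiment, budget=200))
```

The square-root bonus keeps some trials flowing to less-tested conditions, so the campaign keeps exploring while concentrating most runs on the condition with the best observed yield.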

Environmental Science and Climate Research

In environmental and earth sciences, AI is proving instrumental in monitoring the planet and fighting climate change. Climate modeling is benefiting from machine learning techniques that complement traditional physics-based simulations. AI can analyze decades of climate data to improve predictions of extreme weather events or to downscale coarse climate model outputs into detailed local forecasts. For instance, researchers have developed deep learning models that forecast precipitation over the next few hours and help predict hurricane tracks, enabling better disaster preparedness. AI is also optimizing renewable energy management: electricity grids use AI to predict power demand and supply (from wind and solar) in real time, making renewable integration more efficient. Google notably applied a DeepMind AI to its data center cooling and achieved a 40% reduction in cooling energy usage, improving overall energy efficiency (deepmind.google, deepmind.google). This kind of smart optimization can be extended to factories, buildings, and city infrastructure to cut waste and emissions.

Environmental monitoring has been revolutionized by AI’s ability to analyze imagery and sensor data. Satellite and drone images processed with deep learning can detect deforestation, habitat loss, or illegal mining activities far faster than human observers. For example, conservationists use AI models in projects like Project Guacamaya in the Amazon to analyze daily satellite images and identify deforestation patterns in near real-time (news.microsoft.com). By automating the detection of subtle changes in forest cover, these AI systems alert authorities to illegal logging or land clearing much sooner, allowing quicker intervention. Similarly, AI-powered acoustic sensors (“bioacoustics”) are deployed in rainforests and oceans to monitor biodiversity by listening for animal calls or environmental sounds (news.microsoft.com). Patterns in these vast audio streams, deciphered by AI, can indicate ecosystem health or the presence of threats like logging. In agriculture, AI helps analyze soil data and crop images to guide precision farming, reducing fertilizer and water usage. And in pollution management, machine learning models predict air quality or track pollution plumes, helping policymakers issue health warnings or enforce regulations. These scientific applications underscore AI’s role as a force-multiplier in research – not replacing human experts, but expanding their capabilities by finding signals in the noise and handling scales of data that would be otherwise unmanageable.
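
A much simpler relative of these imagery models is vegetation-index differencing: compute the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), for two dates and flag pixels whose value dropped sharply. The sketch below does this on tiny made-up reflectance grids with an assumed drop threshold of 0.3; it is a classical baseline for illustration, not the deep learning method used by Project Guacamaya.

```python
def ndvi(nir, red):
    """Per-pixel NDVI for two equally sized 2-D reflectance grids."""
    return [
        [(n - r) / (n + r) if (n + r) else 0.0 for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]

def flag_vegetation_loss(before, after, threshold=0.3):
    """Return (row, col) pixels whose NDVI fell by more than `threshold`."""
    return [
        (i, j)
        for i, (row_b, row_a) in enumerate(zip(before, after))
        for j, (b, a) in enumerate(zip(row_b, row_a))
        if b - a > threshold
    ]

# Hypothetical 2x3 reflectance grids (near-infrared and red bands).
nir_t0 = [[0.50, 0.52, 0.48], [0.51, 0.49, 0.50]]
red_t0 = [[0.08, 0.07, 0.09], [0.08, 0.08, 0.07]]
nir_t1 = [[0.50, 0.25, 0.48], [0.51, 0.49, 0.22]]  # two pixels cleared
red_t1 = [[0.08, 0.20, 0.09], [0.08, 0.08, 0.21]]

loss = flag_vegetation_loss(ndvi(nir_t0, red_t0), ndvi(nir_t1, red_t1))
print(loss)
```

Healthy vegetation reflects strongly in near-infrared, so cleared pixels show a steep NDVI drop; learned models improve on this by handling clouds, seasonal change, and subtler degradation.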

Case Studies and Breakthroughs

- AlphaFold for Protein Folding: DeepMind’s AlphaFold delivered a breakthrough by predicting the structures of ~200 million proteins, nearly all known proteins (nature.com). This opens new avenues in drug discovery and our understanding of fundamental biology, as researchers can study protein functions and interactions with unprecedented ease.

- Halicin – AI-Discovered Antibiotic: An MIT research team trained a deep learning model to identify molecules with antibacterial properties. The model flagged halicin, from a library of existing compounds, as a potent antibiotic effective against drug-resistant bacteria (news.mit.edu), and the team then used it to screen more than 100 million further molecules. Halicin works against pathogens where existing antibiotics fail, demonstrating AI’s potential to tackle the antibiotic resistance crisis.

- Climate Modeling and Weather Forecasting: Machine learning models are being used alongside traditional climate models. For instance, GraphCast (by DeepMind) and FourCastNet (by NVIDIA) are AI systems that can forecast weather patterns like precipitation or atmospheric pressure faster and with comparable accuracy to conventional methods, by learning from historical meteorological data. These tools provide faster turnaround on forecasts, useful for extreme event prediction in a warming world.

- Wildlife Conservation: In Kenya and other countries, conservationists use AI to combat poaching. The PAWS (Protection Assistant for Wildlife Security) system predicts poaching risk across wildlife reserves using past poaching data and game theory models. It then suggests optimal ranger patrol routes, outperforming traditional patrol planning (aau.edu). Field trials showed that AI-guided patrols found significantly more snares and illegal activities than unguided patrols, directly saving animal lives.

- Pandemic Response: During the COVID-19 pandemic, AI was applied to accelerate vaccine and drug research (by analyzing protein structures of the virus and screening potential compounds), to model disease spread and optimize interventions, and even to assist in diagnostics via AI-based analysis of lung scans. These efforts, while under intense time pressure, highlighted how AI can bolster our toolkit in responding to global health emergencies.
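
The PAWS-style pipeline described above (predict risk, then plan patrols) can be caricatured in a few lines. The sketch is a deliberate simplification: the per-cell risk values are invented, and patrol hours are assigned greedily under an assumed diminishing-returns detection model, whereas PAWS itself combines machine-learned risk models with game-theoretic planning that anticipates how poachers respond.

```python
import heapq

def allocate_patrols(risk, hours, detect_per_hour=0.3):
    """Greedily assign patrol hours to grid cells.

    Expected detections in a cell with h hours are modelled (an assumption) as
    risk * (1 - (1 - p) ** h), so each extra hour in the same cell is worth less.
    Because these marginal gains shrink, greedy assignment maximizes the total.
    """
    p = detect_per_hour
    plan = {cell: 0 for cell in risk}
    # Max-heap via negated gains: (negative marginal gain of the next hour, cell).
    heap = [(-r * p, cell) for cell, r in risk.items()]
    heapq.heapify(heap)
    for _ in range(hours):
        _, cell = heapq.heappop(heap)
        plan[cell] += 1
        next_gain = risk[cell] * (1 - p) ** plan[cell] * p
        heapq.heappush(heap, (-next_gain, cell))
    return plan

# Hypothetical predicted poaching risk per reserve grid cell.
risk = {"north_river": 0.8, "east_ridge": 0.5, "south_plain": 0.2, "west_forest": 0.1}
print(allocate_patrols(risk, hours=10))
```

High-risk cells receive most of the effort, but diminishing returns push some hours to lower-risk areas; a game-theoretic planner would additionally randomize patrols so poachers cannot simply avoid the predictable ones.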

Policy and Ethical Considerations: Governance, Regulations, and Dilemmas

The swift expansion of AI capabilities has spurred active discussions on how to govern and use these technologies responsibly. Policymakers worldwide are grappling with crafting regulations that mitigate AI’s risks without stifling innovation. At the same time, ethicists and researchers are illuminating issues such as bias, privacy, and the consequences of delegating decisions to algorithms. This section examines the evolving policy landscape and key ethical challenges surrounding AI.

Government Regulations and Global AI Policies

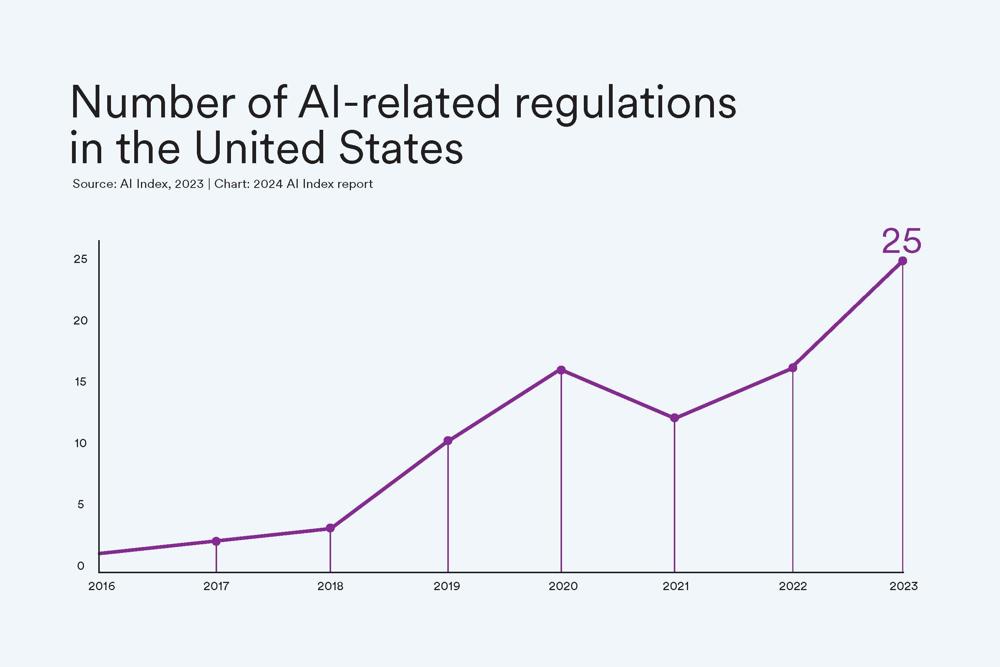

Governments have recognized that AI’s pervasive impact requires regulatory oversight. In the past few years, there has been an uptick in AI-related laws and strategies globally. In the United States, for example, the number of AI-related regulations introduced surged from practically zero in 2016 to 25 in 2023 (weforum.org).

This sharp rise reflects growing legislative attention to AI in areas such as data protection, transparency, and algorithmic accountability.

The European Union is taking a particularly proactive stance through its comprehensive AI Act, poised to be one of the world’s first broad AI regulatory frameworks. The EU AI Act adopts a risk-based approach: it bans outright certain uses deemed to pose “unacceptable risk” (such as social scoring systems that rank citizens, or real-time remote biometric identification in public spaces, subject to narrow law-enforcement exceptions), and imposes strict obligations (on safety, fairness, and transparency) on “high-risk” AI systems like those in healthcare or transportation. Less risky AI applications face lighter requirements. The Act explicitly prohibits AI methods that manipulate human behavior to cause harm or that exploit vulnerable groups (digital-strategy.ec.europa.eu). Once in force (expected 2024–2025), the EU’s rules may set de facto global standards, given the EU’s market size.

Other countries are crafting policies as well. China has invested massively in AI for economic and military purposes but has also introduced rules, for instance to curb deepfake abuses and to regulate recommendation algorithms (demanding transparency and the ability to turn off personalization). In 2023, China’s regulators implemented guidelines requiring watermarking of AI-generated media and holding companies accountable for content from generative models. The UK has taken a light-touch approach so far, promoting innovation sandboxes and issuing guidance rather than hard laws, though it hosted a global AI Safety Summit in 2023. The United States, while lacking a unified federal AI law, relies on sector-specific regulations (e.g., FDA oversight for medical AI tools) and principles: the White House’s 2022 Blueprint for an AI Bill of Rights outlines desired protections such as safeguards against algorithmic discrimination and notice when people interact with automated systems.

Internationally, organizations are pushing for coordination. The OECD’s AI Principles (2019) were adopted by 42 countries, including the United States and many EU member states, and were later endorsed by the G20, marking the first intergovernmental consensus on AI values like fairness, transparency, and human-centeredness (epic.org). UNESCO released its own Recommendation on the Ethics of Artificial Intelligence in 2021, emphasizing similar themes and adding calls for environmental sustainability in AI development. Additionally, groups like the Global Partnership on AI (GPAI) facilitate collaboration on responsible AI among governments and researchers.

Lethal autonomous weapons systems (LAWS) are an example of global concern: the United Nations has convened talks on banning or restricting “killer robots,” with the UN Secretary-General calling such weapons “morally repugnant” and urging a ban (disarmament.unoda.org). While no treaty exists yet, over 60 countries support at least negotiating a new legal instrument on LAWS (hrw.org). In December 2023, 152 UN member states voted for a General Assembly resolution raising concern about autonomous weapons and the need for human control (hrw.org), signaling broad agreement that some AI applications cross ethical red lines.

Ethical Dilemmas: Bias, Privacy, and Transparency

As AI systems permeate high-stakes decisions, ethical concerns have come to the forefront. One major issue is algorithmic bias. AI models trained on historical or web data can inadvertently learn societal biases related to race, gender, or other characteristics, leading to discriminatory outcomes. Studies have shown instances of bias, such as facial recognition systems having higher error rates on darker-skinned and female faces (due to underrepresentation in training data) or hiring algorithms downgrading resumes with female indicators due to learning from past biased hiring (gpai.ai, gpai.ai). These biases can reinforce inequities if unchecked. Policymakers and companies are thus focusing on “AI fairness” – for example, the EU AI Act will require high-risk AI systems to undergo assessments for bias. Techniques for explainability are also in demand, since many AI models (especially deep neural networks) are “black boxes” that don’t easily reveal why they made a given decision. This opaqueness complicates accountability – if an AI system denies someone a loan or an insurance claim, it’s often unclear which factors led to that outcome. To address this, researchers are developing explainable AI methods, and regulations (like proposed U.S. rules on AI in hiring) increasingly call for explanation or auditability of automated decisions.
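
One simple audit statistic behind such bias assessments is the ratio of selection rates between groups. The sketch computes per-group selection rates and flags any group whose rate falls below four-fifths of the highest group's rate, a rule of thumb from US employment-selection guidelines; the decision records are invented, and a real audit would combine several complementary fairness metrics.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected: bool) pairs -> selection rate per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / total[g] for g in total}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: r / best for g, r in rates.items()}

# Hypothetical screening outcomes from an automated hiring tool.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(decisions)
ratios = impact_ratios(rates)
flagged = [g for g, r in ratios.items() if r < 0.8]
print(rates, ratios, flagged)
```

In this made-up example group_b is selected at 62.5% of group_a's rate, below the 0.8 threshold, which would prompt a closer look at the model and its training data rather than prove discrimination on its own.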

Data privacy is another critical concern. AI systems often require huge amounts of data, including personal information, for training. This raises questions about how that data is collected, stored, and used. Regulations like Europe’s GDPR give users rights over personal data and impose strict conditions on processing, which affects AI trained on user data (e.g. requiring anonymization or consent). There have been instances of AI models memorizing sensitive information from training data, underscoring the importance of privacy-preserving techniques. Moreover, the use of AI for surveillance – from facial recognition cameras in public spaces to algorithms monitoring online activity – has civil liberties implications. In some authoritarian contexts, AI-powered surveillance has been used to track and profile citizens (as seen with certain implementations of social credit scoring or real-time facial recognition). Even in democracies, law enforcement use of AI (predictive policing algorithms, for instance) has prompted debates about oversight and potential discrimination. Policymakers are striving to balance the benefits of AI in security with protecting individual rights.
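
Differential privacy is one widely studied family of privacy-preserving techniques. A minimal sketch of its Laplace mechanism: to release a count computed over personal records, add random noise with scale sensitivity/ε, so that any single person's presence changes the output distribution only slightly. The records and the ε value below are illustrative assumptions, and production systems also track the cumulative privacy budget spent across queries.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    A counting query changes by at most 1 when one record is added or removed,
    so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical patient records: (age, has_condition).
records = [(34, True), (51, False), (29, True), (62, True), (45, False)]
rng = random.Random(7)
print(private_count(records, lambda r: r[1], epsilon=0.5, rng=rng))
```

Smaller ε means more noise and stronger privacy; individual releases are deliberately imprecise, while averages over many independent releases converge to the true count, which is why the total budget must be limited.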

Another ethical dilemma is autonomous decision-making in life-and-death situations. For example, self-driving cars may confront “trolley problem” scenarios – if a crash is inevitable, how should the AI weigh potential harm to passengers versus pedestrians? Pre-programming moral choices is exceedingly difficult and controversial. Manufacturers have tended to focus on minimizing accident probability overall, but as autonomy becomes mainstream, societies may need consensus on such value trade-offs. In warfare, autonomous drones or weapons that independently select targets pose ethical and legal challenges: Can a machine reliably adhere to international humanitarian law? Who is responsible if it commits an error? These questions have led to calls for ensuring meaningful human control over any lethal force decisions made by AI.

AI Governance and Ethics Initiatives

In response to these concerns, a range of AI ethics frameworks and oversight bodies have emerged. Tech companies now commonly have internal AI ethics teams to review sensitive applications. For instance, Google established AI Principles in 2018 (pledging not to develop AI for weapons or surveillance that violates norms) and created review processes for new projects. Facebook, Microsoft, and others have similar guidelines and sometimes external ethics boards. However, some high-profile controversies, like the firing of AI ethics researchers who raised bias concerns, show the tension between ethics and business pressures. Independent organizations (e.g., the AI Now Institute and the Partnership on AI) are working to audit algorithms and advise on best practices. There is also a movement toward algorithmic transparency, advocating that people have the right to know when AI is involved in decisions and to understand the system’s logic. Some jurisdictions (New York City, for example) have begun requiring audits of AI hiring tools for bias, and the EU’s draft AI Act would mandate disclosure when users are interacting with AI (such as chatbots or deepfake media). The goal of these efforts is to ensure AI systems are trustworthy, meaning they are fair, explainable, secure, and accountable. Achieving this will likely require ongoing collaboration between regulators, the tech industry, academia, and civil society, as AI technology and its societal context continue to evolve.

Impact on Humans: Workforce, Education, Social Fabric, and Well-being

AI’s growing capabilities are transforming human societies in multifaceted ways. From the nature of jobs and work, to how we learn, to our mental health and social interactions, AI-driven tools bring both promise and disruption. This section explores the benefits AI offers to human life as well as the risks and challenges it poses.

Workforce and the Future of Work

Perhaps the most immediate impact of AI is being felt in the workforce and economy. AI automation – including robotics and intelligent software – is taking over routine, repetitive tasks across industries. This boosts productivity and can free workers from drudgery, but it also disrupts traditional employment. According to the World Economic Forum, by 2025 AI and automation could displace about 85 million jobs worldwide, yet simultaneously create an estimated 97 million new roles in emerging industries and the AI economy (shrm.org). In other words, while certain jobs vanish, new jobs (like data analysts, AI engineers, and robotics technicians, as well as roles in previously unimagined sectors) are expected to appear, potentially offsetting losses. Historical examples show technology tends to create jobs in the long run, but the transition can be difficult for workers whose skills become obsolete. Job polarization is a concern: AI is particularly adept at automating routine cognitive tasks (such as data entry or basic accounting) and routine manual labor (assembly line work), which may squeeze out many middle-skill roles. Jobs that require creativity, complex problem-solving, or social intelligence (e.g. teachers, doctors, artists) are relatively safer from automation in the near term. However, even these fields will be transformed as AI becomes a collaborative tool – for instance, AI assistants might handle administrative tasks or preliminary analysis, allowing professionals to focus on higher-level work.

Overall, AI can augment human productivity. Studies have found that when AI tools are integrated thoughtfully, workers can accomplish more in less time with higher quality (weforum.org). For example, a recent field study of customer support agents found that those given access to an AI assistant that suggested responses resolved 14% more issues per hour on average, and less experienced agents improved the most by learning from AI-suggested responses (weforum.org). AI can also help bridge skill gaps by providing on-the-job guidance. However, if the benefits of AI productivity accrue mainly to company owners or highly skilled tech workers, inequality could worsen. This is why re-skilling and up-skilling programs are crucial: governments and businesses are investing in training workers for the “AI economy,” teaching skills complementary to AI (such as complex problem-solving, interpersonal skills, or AI maintenance). Some economists propose policies like universal basic income or negative income taxes as safety nets if automation outpaces job creation in certain regions or sectors. In summary, AI is reshaping the future of work: many jobs will change rather than disappear, as humans work alongside AI systems. The net impact on employment and economic well-being will depend on how societies manage this transition, distributing AI’s productivity gains and helping workers adapt to new roles.

Education and Learning

AI is also influencing education, changing how we learn and what we need to learn. On one hand, AI offers personalized and accessible learning experiences. Intelligent tutoring systems can adapt to a student’s level, providing tailored exercises and feedback – effectively one-on-one tutoring at scale. For example, AI tutors in subjects like math have been shown to improve student performance by identifying individual weaknesses and adjusting the pace accordingly. Language-learning apps use AI to converse with learners and correct their grammar. Moreover, generative AI can help create educational content, like practice problems or even interactive simulations, reducing the workload on teachers. These tools have potential to democratize education by bringing quality instruction to remote or underserved areas (as long as internet access is available). Students can learn at their own pace with an AI assistant always available, which can be especially helpful for those who need extra support or, conversely, more challenging material.
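
One classic technique that tutoring systems use to estimate what a student has mastered is Bayesian Knowledge Tracing. The sketch updates the probability that a student knows a skill after each answer, then applies a chance of learning from the practice step; the four parameter values (prior knowledge, learn, guess, and slip rates) are illustrative assumptions, not taken from any particular product.

```python
from dataclasses import dataclass

@dataclass
class BKTParams:
    p_init: float = 0.3    # prior probability the skill is already known
    p_learn: float = 0.15  # chance of learning the skill after each practice step
    p_guess: float = 0.2   # chance of answering correctly without knowing the skill
    p_slip: float = 0.1    # chance of answering wrongly despite knowing the skill

def update_mastery(p_known: float, correct: bool, params: BKTParams) -> float:
    """Bayesian update on one observed answer, followed by the learning transition."""
    if correct:
        evidence = p_known * (1 - params.p_slip)
        posterior = evidence / (evidence + (1 - p_known) * params.p_guess)
    else:
        evidence = p_known * params.p_slip
        posterior = evidence / (evidence + (1 - p_known) * (1 - params.p_guess))
    return posterior + (1 - posterior) * params.p_learn

params = BKTParams()
p = params.p_init
for answer in [True, False, True, True]:
    p = update_mastery(p, answer, params)
    print(f"answered {'right' if answer else 'wrong'}: P(mastered) = {p:.2f}")
```

A tutor built on this idea might keep serving exercises for a skill until the estimate crosses a chosen threshold (for example 0.95) and then move the student on, which is one concrete way of "adjusting the pace" per learner.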

On the other hand, the rise of AI raises questions about the skills students should acquire. As AI handles more routine analytical tasks, there may be greater emphasis on creativity, critical thinking, and social-emotional skills in curricula. Digital literacy and understanding AI itself are becoming important – some schools have started teaching basic AI concepts so that the next generation can better work with (and oversee) AI systems. The availability of tools like ChatGPT has sparked debate in academia regarding cheating and the value of traditional assignments. If an AI can write passable essays or code, educators need to craft assessments that encourage original thought or test deeper understanding, possibly through oral exams or project-based learning. There are also concerns about equity: if affluent students have access to advanced AI tutors or devices and others do not, it could widen achievement gaps. Data privacy is relevant as well, since educational AI platforms may gather sensitive data on student learning patterns. Policymakers are considering guidelines for AI in schools to ensure transparency and data protection.

In higher education and job training, AI is used to match students to personalized career paths or to simulate job scenarios (for example, nursing students training with AI-driven virtual patients). Lifelong learning will be essential in an AI-driven economy; people may frequently update their skills through online courses and AI coaching systems. The overarching impact of AI on education is likely positive if leveraged properly – improved learning outcomes and efficiency – but it requires rethinking pedagogies and maintaining human oversight so that education remains holistic, fostering attributes that complement AI rather than compete with it.

Mental Health and Well-being

AI’s influence on mental health is complex, featuring promising therapeutic tools as well as potential harms. On the positive side, AI-powered chatbots are being developed to provide cognitive behavioral therapy (CBT) and mental health coaching. These chatbots (like Woebot or Wysa) use natural language processing to converse with individuals, guiding them through exercises to manage anxiety or depression. A meta-analysis of studies found AI-based chatbot interventions showed a “promising role” in alleviating depressive and anxiety symptoms (pubmed.ncbi.nlm.nih.gov), particularly as an accessible first line of support. Such bots are available 24/7 and can help overcome barriers like stigma or shortage of human therapists. For example, during the pandemic, many people turned to mental health apps and found relief in chatting with an empathetic AI when human help was scarce. AI is also employed in analyzing social media posts or smartphone data (like typing speed, sleep patterns) to passively detect signs of mental distress, potentially alerting caregivers before a crisis. These applications could enable more proactive mental healthcare and suicide prevention.

However, there are significant caveats. An AI chatbot lacks true understanding and may not respond appropriately to severe situations, so it must be used with caution and clear disclaimers (and ideally, escalation to human care when needed). Over-reliance on chatbot “therapy” without professional oversight might lead to missed diagnoses. Additionally, people can form emotional attachments to human-like AI companions – apps like Replika offer “AI friends” – which some find comforting, but others see as potentially exacerbating loneliness or providing a false sense of relationship. Ensuring these systems are safe and do not provide harmful advice is an active area of quality control (for instance, GPT-4 and similar models are now being tested as mental health assistants, but concerns remain about consistency and liability).

On the flip side, AI-driven algorithms in social media have been implicated in mental health challenges. Recommendation systems that maximize engagement can inadvertently promote content that makes users anxious, angry, or depressed – because such content sometimes drives more clicks. Endless algorithm-curated feeds might contribute to social comparison and cyberbullying, impacting youth mental health. There is evidence linking heavy social media use (as shaped by AI algorithms) with increased rates of anxiety and depression, though causation is debated. What is clearer is that filter bubbles and personalized content streams can amplify feelings of isolation or distorted reality. For example, if someone with body image issues is algorithmically fed a stream of idealized physiques, it can worsen their self-esteem. These are not issues inherent to AI, but rather how AI is optimized in platforms for objectives like ad revenue. Some companies are now introducing features to reduce doom-scrolling or to inject more uplifting content as a response to such critiques.

In summary, AI offers new modalities for supporting mental health – making care more accessible and personalized – but also poses challenges through the unintended effects of algorithms on human well-being. Achieving net positive outcomes will require ethical design (aligning AI systems with human wellness rather than just engagement) and hybrid approaches that keep human judgment in the loop for mental healthcare.

Social Structures and Relationships

AI is subtly but powerfully reshaping social structures and interactions. On a societal scale, AI-driven systems influence public discourse and even democratic processes. Social media algorithms determine which news or posts people see, thereby affecting opinions and potentially polarizing communities. Research indicates that maximizing engagement via these algorithms can lead to amplification of divisive or extremist content (brookings.edu). As one Brookings report summarized, “Maximizing online engagement leads to increased polarization. The fundamental design of platform algorithms helps explain why they amplify divisive content.” (brookings.edu) Echo chambers can form when AI learns an individual’s preferences and feeds them more of the same perspective, reinforcing biases and diminishing shared understanding. This dynamic has been linked to election misinformation campaigns, in which AI-powered recommendation engines inadvertently promoted conspiracy theories to certain user segments, contributing to real-world conflicts. In response, there have been calls for algorithmic transparency and for platforms to adjust their algorithms to prioritize content from diverse viewpoints or verified information. Some platforms now allow users to see content chronologically or to disable personalized ranking to mitigate this issue.
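To make the engagement-versus-diversity trade-off concrete, the following Python sketch compares a pure engagement ranking with a simple greedy re-ranking that penalizes repeated viewpoints. The `Post` records, viewpoint labels, scores, and the `penalty` parameter are all hypothetical; real platform rankers combine many more signals, so this illustrates the idea rather than any platform's actual method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: str
    viewpoint: str               # hypothetical perspective label, e.g. "A" or "B"
    predicted_engagement: float  # hypothetical model score in [0, 1]


def rank_by_engagement(posts):
    """Pure engagement ranking: highest predicted engagement first."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rerank_with_diversity(posts, penalty=0.3):
    """Greedy re-ranking: a post's score is reduced by `penalty` for every
    post with the same viewpoint already placed in the feed."""
    remaining = list(posts)
    feed = []
    shown = {}  # viewpoint -> number of posts already placed in the feed
    while remaining:
        best = max(
            remaining,
            key=lambda p: p.predicted_engagement - penalty * shown.get(p.viewpoint, 0),
        )
        feed.append(best)
        remaining.remove(best)
        shown[best.viewpoint] = shown.get(best.viewpoint, 0) + 1
    return feed


posts = [
    Post("a1", "A", 0.90), Post("a2", "A", 0.85), Post("a3", "A", 0.80),
    Post("b1", "B", 0.60), Post("b2", "B", 0.55),
]
print([p.post_id for p in rank_by_engagement(posts)])     # ['a1', 'a2', 'a3', 'b1', 'b2']
print([p.post_id for p in rerank_with_diversity(posts)])  # ['a1', 'b1', 'a2', 'b2', 'a3']
```

With these made-up scores, the engagement-only feed shows all three viewpoint-A posts first, while the re-ranked feed alternates perspectives at a modest cost in predicted engagement. Raising `penalty` trades more engagement for more diversity.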

Deepfakes – hyper-realistic AI-generated fake videos or audio – represent another social challenge. Deepfakes can undermine trust in media, as people become unsure whether a video of a public figure is real or fabricated. They have been used maliciously to create non-consensual pornography or to impersonate voices for fraud. The existence of such technology means society may require new norms (e.g., digital signatures for authentic media) to preserve a shared reality. On the positive side, the same technology can be used for harmless entertainment or for convincingly dubbing films, but vigilance is needed so that deepfakes don’t erode social trust or become weapons of disinformation.

On interpersonal levels, AI is mediating more of our relationships. Recommender systems decide which friends’ posts we see, dating app algorithms suggest potential partners, and AI assistants (like Siri or Alexa) are becoming quasi-members of the household. Some people interact with voice assistants or chatbot “friends” extensively, which raises novel questions: Can AI fulfill social needs? What happens when AI intermediates communication between people (for instance, auto-completion might script our emails or messages)? There are even instances of AI being used in hiring or legal decisions (like algorithms screening resumes or setting bail terms), which affects individuals’ life chances and trust in institutional fairness. Ensuring these systems are unbiased and transparent is crucial for social cohesion.

Despite concerns, AI also provides tools to strengthen communities – for example, translation algorithms break language barriers, enabling cross-cultural communication at scale. Social robots are being used in elder care or as companions for autistic children, often yielding positive results in engagement and emotional comfort. And during crises (natural disasters, pandemics), AI helps coordinate humanitarian response and information dissemination, which can bring communities together. The social impact of AI thus has dual faces: it can connect or divide, empower or marginalize. The outcome depends on how we govern the deployment of AI in social spheres, aligning it with human values like inclusion, respect, and authenticity.

Impact on Animals: Wildlife, Biodiversity, and Animal Welfare

Beyond human society, AI is also affecting the animal kingdom and our natural ecosystems. From conservation efforts that employ AI to protect endangered species, to farming and veterinary applications, to indirect impacts on habitats, AI’s reach extends to non-human life. This section examines how AI is helping (and in some cases potentially harming) animals and biodiversity.

Wildlife Conservation and Monitoring

Conservationists are increasingly leveraging AI to better understand and protect wildlife populations. One significant application is in wildlife monitoring through camera traps and acoustic sensors. Networks of camera traps in forests and reserves capture millions of images of passing animals. Traditionally, researchers spent countless hours manually identifying species in these photos. Now, AI image recognition models can automate this process with high accuracy, identifying species – and even individual animals – from photographs. For example, the Wildlife Insights platform uses AI to process camera trap images, drastically cutting analysis time and providing near-real-time data on biodiversity (wildlifeinsights.org). Similarly, scientists have developed AI systems capable of recognizing individual faces or coat patterns of animals (like tigers’ stripes, whales’ flukes, or dolphin dorsal fins). A 2023 study demonstrated an AI tool that reliably recognizes individual dolphins and whales across 24 species (mmrphawaii.org), a task that previously required expert scientists. Identifying individuals allows for better tracking of animal movements and population sizes, informing conservation strategies (e.g., estimating how many snow leopards live in a region, or whether a particular elephant herd is recovering).
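A common pattern in camera-trap pipelines is to accept confident automatic labels and route uncertain images to human experts. A minimal sketch of that triage step follows, assuming a hypothetical classifier that returns a species label and a confidence score per image; the 0.9 threshold and the sample predictions are illustrative and are not taken from Wildlife Insights or any specific tool.

```python
from collections import Counter

# Hypothetical classifier output: (image_id, predicted_label, confidence).
# In practice these would come from a trained species-recognition model.
predictions = [
    ("img_001", "ocelot", 0.97),
    ("img_002", "empty", 0.99),
    ("img_003", "tapir", 0.62),
    ("img_004", "ocelot", 0.91),
    ("img_005", "jaguar", 0.88),
]


def triage(preds, threshold=0.9):
    """Split predictions into auto-accepted labels and a human-review queue."""
    accepted, needs_review = [], []
    for image_id, label, confidence in preds:
        target = accepted if confidence >= threshold else needs_review
        target.append((image_id, label, confidence))
    return accepted, needs_review


accepted, needs_review = triage(predictions)
# Count detections per species, ignoring frames the model judged empty.
species_counts = Counter(label for _, label, _ in accepted if label != "empty")
print(species_counts)                       # Counter({'ocelot': 2})
print([img for img, _, _ in needs_review])  # ['img_003', 'img_005']
```

Lowering the threshold sends fewer images to reviewers but lets more misidentifications into the species counts; the right setting depends on how costly errors are for the study.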

Another powerful use of AI is in predicting and preventing poaching and illegal wildlife trade. As mentioned, systems like PAWS combine machine learning with game theory to predict poaching hotspots and optimal patrol routes for rangers (aau.edu). By analyzing past poaching incident data (locations of snares, patrol frequencies, terrain, etc.), the AI can identify patterns and suggest where limited ranger resources should be focused on any given day for maximum impact. This data-driven approach has significantly increased poacher arrests and snare confiscations in field tests, enhancing the protection of animals like elephants, rhinos, and big cats that are often targeted (aau.edu). Drones equipped with AI-based thermal imaging also patrol parks at night to catch poachers, with some systems able to distinguish human intruders from animals automatically.
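The core idea of focusing scarce ranger effort where predicted risk is highest can be sketched in a few lines. This is a deliberately simplified, hypothetical version: the cell names and risk scores are invented, and it picks cells greedily, whereas PAWS also applies game-theoretic reasoning about how poachers respond to patrols, which this sketch omits.

```python
import heapq

# Hypothetical per-cell poaching-risk scores (e.g. probabilities from a
# model trained on past snare finds, terrain, and patrol effort).
risk_by_cell = {"C1": 0.05, "C2": 0.40, "C3": 0.10, "C4": 0.30, "C5": 0.15}


def plan_patrols(risk, num_patrols):
    """Greedy plan: send patrols to the highest-risk cells."""
    return heapq.nlargest(num_patrols, risk, key=risk.get)


def risk_covered(risk, cells):
    """Fraction of total predicted risk that the chosen cells account for."""
    return sum(risk[c] for c in cells) / sum(risk.values())


plan = plan_patrols(risk_by_cell, num_patrols=2)
print(plan)                                        # ['C2', 'C4']
print(round(risk_covered(risk_by_cell, plan), 2))  # 0.7
```

Here two patrols cover about 70% of the predicted risk; a purely deterministic plan like this would be easy for poachers to anticipate, which is one reason real systems go beyond it.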

AI-assisted habitat monitoring extends to detecting environmental changes that affect animals. For instance, AI analyzes satellite data to detect deforestation (as discussed in the scientific perspective) – this helps conservationists intervene when a forest habitat is being illegally cleared. It can also classify land use and identify pristine habitats in need of protection. Bioacoustic AI models pick up signals like gunshots or chainsaws in audio recordings of protected areas, triggering rapid response to human encroachment. In marine conservation, AI algorithms using underwater microphones can monitor whale and dolphin calls to map their presence and stress levels (ship noise and sonar can disturb them), enabling the creation of quieter shipping routes or temporary halts to naval exercises. Overall, AI has become a force multiplier for conservation: doing the grunt work of data analysis, spotting threats early, and guiding human action to where it’s most needed, thereby improving outcomes for wildlife.

Veterinary Medicine and Animal Care

AI is making inroads in veterinary medicine and animal husbandry as well. As in human healthcare, diagnostic imaging for animals (pets or livestock) can benefit from AI. There are AI tools trained to read veterinary X-rays or ultrasound images to detect conditions in cats, dogs, and horses. For example, an AI model might assist in spotting hip dysplasia in dog radiographs or in identifying tumors in pets at an earlier stage. Since many vets work in general practice without an imaging specialty (unlike human medicine, where radiologists focus solely on imaging), having a second pair of “AI eyes” can be very helpful. AI systems are also used on farms to monitor livestock health. Computer vision cameras in barns can track the movement and feeding behavior of cows or pigs – if a cow is moving less or not eating, it might be an early sign of illness, and the AI can alert farmers to inspect that animal. Companies have developed facial recognition for cattle that, combined with temperature sensors and other data, helps identify sick animals quickly to prevent disease spread in herds.
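The “moving less than usual” alert described above can be illustrated with a rolling-baseline check. The sketch below assumes hypothetical daily step counts from a collar sensor and flags any day that falls below 60% of the animal's own trailing seven-day average; both the window and the threshold are illustrative choices, and commercial systems combine many more signals.

```python
from statistics import mean


def flag_low_activity(daily_activity, window=7, drop_fraction=0.6):
    """Return day indices whose activity is below `drop_fraction` of the
    average over the preceding `window` days."""
    flagged = []
    for day in range(window, len(daily_activity)):
        baseline = mean(daily_activity[day - window:day])
        if daily_activity[day] < drop_fraction * baseline:
            flagged.append(day)
    return flagged


# Hypothetical daily step counts for one cow from a collar sensor.
cow_steps = [5000, 5100, 4900, 5000, 5050, 4950, 5000, 4800, 2500, 5100]
print(flag_low_activity(cow_steps))  # [8]
```

Comparing each animal against its own recent history, rather than a herd-wide norm, avoids flagging animals that are simply less active by temperament.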

Moreover, AI is contributing to animal behavior research. Biologists studying animal communication are using machine learning to decode patterns in animal sounds. There have been attempts to use AI to interpret the songs of whales or the dances of bees; for instance, Project CETI (Cetacean Translation Initiative) employs AI to analyze hundreds of thousands of sperm whale clicks to see if we can understand their language patterns. Early results have clustered whale sounds into categories that might correspond to specific contexts or meanings, a first step toward a potential “Rosetta Stone” for animal communication. In primatology, AI-driven analysis of hours of video footage can catalog behaviors of chimpanzees or other primates in the wild more systematically than a human observer could, yielding insights into social structures and well-being.

For companion animals, AI-powered apps can help pet owners. Some apps let owners photograph their pet and have an AI flag possible health issues (like skin conditions on a dog). Pet wearables with AI analyze activity and vitals, analogous to human fitness trackers, to keep pets healthy. However, the uptake of AI in everyday veterinary practice is still at an early stage, and veterinary professionals need to validate these tools before they can trust them. Nonetheless, as algorithms become more robust and better validated by research, we can expect more routine use – for example, automated estrus detection in dairy cows (important for breeding timing) or AI-guided feeding systems that adjust the feed mix per animal to optimize nutrition and reduce waste.

Risks to Biodiversity and Habitat

While AI provides many tools for protecting nature, it also poses some risks to animals and ecosystems, often indirectly through its influence on human activity. One concern is that AI could accelerate resource extraction and habitat destruction if misapplied. For instance, AI-driven optimization in industries like agriculture or forestry might increase yield or efficiency in the short term but at the expense of wildlife. An AI system might recommend the most efficient way to harvest timber, which, if not checked by sustainability considerations, could lead to over-exploitation. AI algorithms focused solely on profit could ignore environmental externalities – essentially entrenching unsustainable business models (gpai.ai). As one report notes, if AI is used to “optimize and entrench unsustainable business models or to drive e-commerce consumerism, thereby increasing resource demand,” it can indirectly harm biodiversity (gpai.ai). For example, AI-enabled logistics systems make same-day deliveries ultra-efficient, encouraging more consumption and packaging waste. More consumption drives more production and extraction of raw materials from natural habitats.

Another risk is the disturbance of wildlife by autonomous machines. As drones and robots become common, they could stress animals if not managed – e.g., drones flying near bird nesting sites can cause panic and nest abandonment. If autonomous vehicles proliferate in wilderness areas (for monitoring or tourism), they might disrupt animal movement patterns (though they could also be programmed to avoid animals if designed responsibly). There’s also the scenario of autonomous weapons in conflict zones harming wildlife; land mines have injured animals historically, and one could imagine an autonomous combat drone failing to distinguish an animal from a human in a conflict area.

The environmental footprint of AI infrastructure itself can impact nature. Large data centers (which power cloud computing and AI services) occupy significant land, and many consume large volumes of water for cooling. While tech companies strive to use renewable energy, some data centers are built in sensitive areas or draw cooling water from stressed watersheds, which can affect local ecosystems. Furthermore, the hardware supply chain for AI – mining of rare earth elements and metals for semiconductors and batteries – often has direct environmental impacts including deforestation, soil and water pollution, and wildlife displacement near mines.

Finally, if AI contributes to climate change via high energy consumption, that poses a fundamental threat to biodiversity. Many species are already struggling to adapt to rapid climate shifts; any additional AI-related emissions add to this pressure (though, as discussed earlier, AI is also being used to mitigate climate change). The key is ensuring AI’s net effect on nature is positive by steering its use towards conservation and sustainability, and explicitly accounting for biodiversity in AI deployment decisions. This has been recognized in principles like “AI for Good” – emphasizing that AI should not just optimize for human benefit in the short term, but for planetary health long term.

Impact on Nature and Climate: AI for Sustainability vs Environmental Costs

AI’s relationship with the natural environment is double-edged. It holds significant promise in helping address climate change and promoting sustainability, yet it also contributes to environmental degradation through its resource demands. In this section, we explore how AI is being applied to tackle environmental challenges, and we weigh the positive contributions against the environmental costs of AI itself.

AI in Climate Change Mitigation and Adaptation

As the world faces the climate crisis, AI has emerged as a valuable ally in both mitigation (reducing greenhouse gas emissions) and adaptation (preparing for climate impacts). One key area is energy efficiency and optimization. AI algorithms are used to manage electrical grids more efficiently, especially with the rise of renewable but variable energy sources like solar and wind. Machine learning can forecast energy production (predicting when the sun will shine or wind will blow) and energy demand, allowing grid operators to balance supply and demand in real time and reduce reliance on fossil fuel backup. In smart grids, AI can help route electricity optimally and manage storage systems (like batteries) to minimize waste. As noted, Google applied AI to its data centers to cut the energy used for cooling by up to 40% (deepmind.google), and such techniques can be extended to HVAC systems in large buildings or even city-wide initiatives – for instance, adjusting traffic light patterns via AI to reduce vehicle idling time and emissions in congested urban areas.
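Forecasting itself is beyond a short example, but once demand and renewable-output forecasts exist, using storage to absorb surpluses can be sketched simply. The function below is a hypothetical, greedy hour-by-hour rule (charge on surplus, discharge on deficit, ignoring storage losses and prices), not how any particular grid operator dispatches batteries; the forecast numbers are invented.

```python
def simulate_dispatch(demand, renewable, capacity, max_rate):
    """Greedy hourly battery dispatch (energy values in MWh per hour).

    Charges from renewable surplus, discharges to cover deficits, and
    returns (backup_energy_needed, curtailed_surplus). Ignores storage losses.
    """
    stored = 0.0
    backup = 0.0
    curtailed = 0.0
    for load, supply in zip(demand, renewable):
        net = load - supply
        if net < 0:  # surplus renewable energy this hour
            charge = min(-net, max_rate, capacity - stored)
            stored += charge
            curtailed += -net - charge
        else:  # deficit: use stored energy first, then backup generation
            discharge = min(net, max_rate, stored)
            stored -= discharge
            backup += net - discharge
    return backup, curtailed


# Hypothetical forecasts for four hours (MWh).
demand_forecast = [50, 50, 60, 70]
renewable_forecast = [80, 70, 40, 30]

print(simulate_dispatch(demand_forecast, renewable_forecast, capacity=40, max_rate=30))  # (20.0, 10.0)
print(simulate_dispatch(demand_forecast, renewable_forecast, capacity=0, max_rate=30))   # (60.0, 50.0)
```

In this toy case the battery cuts the energy needed from backup generation from 60 MWh to 20 MWh and reduces wasted (curtailed) renewable output from 50 MWh to 10 MWh. Better forecasts make such decisions more reliable, which is where machine learning contributes.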

AI also aids the development of clean technologies. In materials science, AI models are being used to search for new materials for more efficient solar panels, better batteries, or carbon capture. By digesting scientific data and suggesting promising molecular structures, AI accelerates discoveries that could yield breakthroughs in energy storage (like batteries that charge faster or hold more energy) or carbon sequestration (materials that can absorb CO₂ more cheaply). In transportation, AI is integral to operating autonomous electric vehicles and to optimizing delivery-truck routes to cut fuel use. AI-enabled carpooling and ride-sharing platforms can also cut emissions by reducing the number of vehicles on the road.

On the adaptation side, AI provides improved climate predictions and disaster response. Climate change is making extreme weather events more frequent and severe; AI models help forecast these events with greater accuracy and lead time. For example, by analyzing historical weather and satellite data, AI can improve early warning systems for hurricanes, floods, and wildfires. This allows communities to prepare and adapt, potentially saving lives and reducing economic damage. In agriculture, AI-driven climate models inform farmers of shifting weather patterns so they can adjust crop varieties or planting schedules. AI can even assist in climate-resilient urban planning – analyzing data to identify heat islands in a city or areas prone to flooding, so authorities can plant more trees or improve drainage where it’s most needed.

Importantly, AI is being used to monitor greenhouse gas emissions. Satellite data combined with AI can detect methane leaks from oil and gas facilities (methane is a potent greenhouse gas) or illegal flaring. Some startups use AI to verify corporate carbon emissions and offsets, increasing transparency and accountability in climate commitments. All these contributions make AI a powerful tool in humanity’s effort to combat climate change. Indeed, a comprehensive review in 2019 identified dozens of high-impact opportunities for machine learning in climate action – from energy to agriculture to geoengineering (sciencedirect.com). The caveat is that technology alone is not a silver bullet; AI needs to be part of a broader societal push including policy changes and behavioral shifts.

Sustainability and Environmental Protection

Beyond climate, AI is deployed in various sustainability and environmental protection initiatives. In agriculture, an approach known as precision agriculture or “smart farming” uses AI systems to analyze sensor and drone data on soil health, moisture, and crop growth and to advise farmers on optimal watering, fertilization, and pest control. This reduces the use of water, fertilizers, and pesticides – benefiting the environment by preventing runoff pollution and conserving resources, while also improving yields. AI-powered robots can do targeted weeding or harvesting, further minimizing chemical usage. There are even AI tools to advise farmers on crop rotation or cover cropping to maintain soil carbon, tying into climate mitigation through improved soil sequestration.

In water management, AI helps monitor water quality in rivers and oceans via autonomous sensors that detect chemical signs of pollution or algal blooms, enabling quicker cleanup responses. Machine learning models are used to predict fish populations and guide sustainable fishing quotas, helping prevent overfishing and collapse of marine ecosystems. Some port cities use AI to manage ship traffic in ways that reduce underwater noise pollution, protecting marine mammals.

Urban sustainability is another front: smart city initiatives use AI for efficient public transportation routing, dynamic lighting (dimming streetlights when no one’s around, etc.), and optimizing waste collection routes (saving fuel and ensuring trash doesn’t overflow). These incremental improvements add up to significant resource savings and lower emissions. AI is also enhancing recycling processes – computer vision can sort recyclables from trash on conveyor belts faster and more accurately than humans, improving recycling rates and reducing landfill use.

Environmental protection agencies employ AI for ecosystem monitoring. For example, AI can classify land cover from satellite images to see how wetlands or forests are changing over time, informing conservation policy. In oceans, AI-assisted analysis of satellite imagery helps detect illegal fishing vessels in marine protected areas (using pattern recognition to spot suspicious ship movements). Similarly, AI can track ships engaged in illicit activities like dumping waste. By enforcing environmental laws more effectively, AI indirectly safeguards nature.

Carbon footprint analysis is another application – corporations are beginning to use AI to analyze their supply chains and find inefficiencies or emissions hotspots. This might involve analyzing logistics (to reduce travel distance) or manufacturing (to cut energy use), effectively embedding sustainability into the optimization criteria that AI handles.

In summary, AI, when directed with environmental objectives, serves as a powerful enabler for sustainability: optimizing resource use, reducing pollution, and bolstering conservation efforts. The growing field of “AI for Earth” or “AI for Good” emphasizes exactly these positive applications, and numerous projects are underway globally to harness AI for the planet’s benefit.

Environmental Costs of AI

Counterbalancing the above, we must confront the environmental costs of AI itself. Training large AI models and operating countless AI-powered devices consume significant energy and resources, contributing to pollution and carbon emissions. A landmark study in 2019 highlighted the hefty carbon footprint of deep learning research: training a single big AI model (including an extensive neural architecture search) was estimated to emit over 626,000 pounds of CO₂ – roughly five times the lifetime emissions of an average car (including manufacture) (technologyreview.com). This eye-opening figure, albeit for an extreme case, underscored that deep learning can carry a heavy carbon footprint when efficiency is ignored (technologyreview.com). More recent models like GPT-3 and GPT-4 required even more compute; while their exact training emissions are often not disclosed, independent analyses suggest on the order of hundreds of tons of CO₂ per model. Additionally, inference (the everyday use of AI models by millions of users) adds ongoing energy costs in data centers. For example, every time someone asks a question to a large language model like ChatGPT, the data center performs GPU computations that, aggregated over billions of requests, draw substantial power.
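The arithmetic behind such estimates is straightforward. The sketch below converts the 2019 figure to metric tonnes and applies a common back-of-the-envelope formula: accelerator energy, multiplied by the data center's power usage effectiveness (PUE), multiplied by the carbon intensity of the grid. The job size, per-GPU wattage, PUE, and both grid intensities are hypothetical values chosen for illustration; real accounting would also include CPUs, memory, networking, and the embodied emissions of the hardware.

```python
LB_PER_KG = 2.20462  # pounds per kilogram


def lb_to_tonnes(pounds):
    """Convert pounds to metric tonnes."""
    return pounds / LB_PER_KG / 1000


def training_emissions_kg(num_gpus, hours, watts_per_gpu, pue, grid_kg_co2_per_kwh):
    """Rough estimate: accelerator energy, scaled by data-center overhead (PUE),
    times the carbon intensity of the electricity supplying it."""
    energy_kwh = num_gpus * hours * watts_per_gpu / 1000 * pue
    return energy_kwh * grid_kg_co2_per_kwh


# The 2019 figure cited above, and the car lifetime it implies (about 1/5 of it).
model_lb = 626_000
print(round(lb_to_tonnes(model_lb)))      # 284
print(round(lb_to_tonnes(model_lb / 5)))  # 57

# Hypothetical job: 64 GPUs for 240 hours at 400 W each, PUE of 1.1,
# on an illustrative fossil-heavy grid versus a low-carbon grid.
fossil_heavy = training_emissions_kg(64, 240, 400, 1.1, 0.4)
low_carbon = training_emissions_kg(64, 240, 400, 1.1, 0.05)
print(round(fossil_heavy), round(low_carbon))  # 2703 338
```

The same hypothetical job emits roughly eight times less on the low-carbon grid, which is why the choice of where and when to train matters.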

There is also the e-waste and materials aspect. AI requires specialized hardware – GPUs, TPUs, AI accelerators – which are made of silicon, rare earth metals, gold, and other materials. The production of these components involves mining and manufacturing processes that can be environmentally damaging. Rare earth metal mining often leads to habitat destruction and toxic waste if not managed properly. And as AI hardware becomes obsolete (which can happen quickly, given rapid advances), disposing of or recycling millions of chips and electronic devices becomes a challenge. Data centers themselves have a physical footprint, and their equipment is often replaced after only a few years, adding to construction and electronic waste.

The good news is that awareness of these costs has risen, and there is movement toward greener AI. Researchers are exploring more energy-efficient algorithms and model architectures (for instance, techniques that require less training data or computation, or novel chip designs that are more power-efficient). There is also an emphasis on using renewable energy to power data centers – many tech companies now run a large portion of their operations on wind, solar, or hydroelectric power to reduce net emissions. Some AI models are being trained in regions or at times when renewable energy is abundant (like scheduling non-urgent training for a windy night in Texas when wind farms are over-producing). Additionally, there’s interest in “TinyML” – making AI models small and efficient enough to run on low-power devices like smartphones or microcontrollers, which reduces the need for heavy cloud computation.
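Carbon-aware scheduling of a deferrable training job reduces to picking the time window with the lowest forecast grid carbon intensity. A minimal sliding-window sketch follows; the hourly forecast values are hypothetical, and a real scheduler would obtain forecasts from a grid-data source and would also weigh deadlines, hardware availability, and whether the job can be paused.

```python
def best_start_hour(intensity_forecast, job_hours):
    """Find the contiguous window of `job_hours` with the lowest average
    forecast grid carbon intensity. Returns (start_index, average_intensity)."""
    if not 0 < job_hours <= len(intensity_forecast):
        raise ValueError("job_hours must fit within the forecast horizon")
    window_sum = sum(intensity_forecast[:job_hours])
    best_start, best_sum = 0, window_sum
    # Slide the window one hour at a time, updating the sum incrementally.
    for start in range(1, len(intensity_forecast) - job_hours + 1):
        window_sum += intensity_forecast[start + job_hours - 1] - intensity_forecast[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / job_hours


# Hypothetical hourly forecast (gCO2/kWh); a windy night pushes intensity down.
forecast = [420, 410, 380, 300, 180, 150, 160, 240, 390, 430]
start, avg = best_start_hour(forecast, job_hours=3)
print(start, round(avg, 1))  # 4 163.3
```

Starting at hour 4 instead of hour 0 cuts the job's average intensity from about 403 to about 163 gCO2/kWh under these assumed numbers.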

Another mitigation strategy is carbon offsetting by AI firms, though offsets are not a perfect solution and depend on the integrity of offset projects. The community is also recognizing that bigger is not always better in AI – a shift to value more efficient algorithms rather than blindly pursuing more compute-heavy ones for marginal gains. The concept of AI sustainability now features in many AI conferences and corporate responsibility reports.

In conclusion, while AI can be a green enabler in many domains, the AI sector must clean up its own environmental act. With conscientious effort – improving efficiency, using green energy, and recycling hardware – the net balance can be tipped such that AI’s contributions to fighting climate change and protecting nature far outweigh its footprint. Achieving this balance is an ongoing challenge that requires aligning the AI industry’s incentives with global sustainability goals.

Future Scenarios: Utopian Visions, Dystopian Risks, and the Path Ahead

Looking forward, AI stands as a pivotal technology that could shape the trajectory of civilization in profound ways. Experts have sketched a spectrum of scenarios for AI’s future – from highly beneficial outcomes where AI helps solve humanity’s greatest problems, to perilous outcomes where AI exacerbates risks or even escapes human control. While the truth will likely lie between extremes, examining these scenarios is valuable for preparedness. In this section, we outline potential futures, including the concept of a technological singularity, the economic transformations that may arise, and emerging ideas for global AI governance.

Utopian Advancements: AI for Human Flourishing

In optimistic scenarios, AI becomes a powerful tool universally harnessed for human flourishing. Imagine a future where AI systems handle most of the drudgery and danger in work, allowing people to spend time on creative, social, or leisure pursuits. Productivity could skyrocket to the point that material abundance becomes feasible – some technologists foresee an “age of plenty” where AI-driven automation drastically lowers the cost of goods and services. In such a world, poverty and scarcity might be greatly reduced. AI might help find cures for diseases that have long eluded us by decoding complex genetic and protein interactions (perhaps extending healthy human lifespans as a result). Education could be revolutionized so that everyone has access to a personal AI tutor or mentor that tailors instruction to each learner and helps them reach their full potential.

A benevolent AI could also assist in high-level problem solving: advising governments on optimal policies to address issues like climate change, mediating conflicts by finding win-win solutions, and coordinating global efforts in ways humans alone struggle to do due to biases or limited information processing. Some utopian visions include AI aiding scientific breakthroughs – for example, drastically accelerating research toward sustainable fusion energy or interstellar travel. Proponents like futurist Ray Kurzweil are optimistic that by merging human intelligence with AI (through brain-computer interfaces or AI assistants), we could amplify human intellect a millionfold and solve challenges once thought intractable (popularmechanics.com, en.wikipedia.org). The end of aging, elimination of diseases, restoration of endangered species via bioengineering, and even artistic renaissance driven by human-AI collaboration are all features of the more idealistic forecasts.

Socially, AI could strengthen communities through better communication tools (instantly translated, empathetic) and by taking over mundane chores, giving people more time for family, culture, and hobbies. Dangerous jobs (mining, deep-sea diving, firefighting) might be entirely handled by robots, making human life safer. If managed well, AI could help reduce inequality – for instance, through global distribution of AI tutors and doctors reaching poor regions, narrowing gaps in education and healthcare. Such an outcome likely requires deliberate policy to redistribute AI’s gains (e.g., a universal basic income funded by AI productivity, or communal ownership of AI-driven industries as some have suggested) so that all benefit, not just a few. This “techno-utopia” is by no means guaranteed, but it represents a hopeful direction: AI serving as an empowering technology that, combined with human wisdom and ethical guidance, ushers in a new era of prosperity and enlightenment.

Dystopian Risks: Unemployment, Inequality, and Loss of Control

On the flip side, many cautionary scenarios paint AI as a source of upheaval or even existential threat. A more modest dystopian outcome involves severe socio-economic disruption: AI and robots could displace huge swaths of workers faster than economies can adapt, leading to unemployment levels not seen since the Great Depression. Without proper safety nets, this could fuel social unrest, populist backlash against technology, and widening inequality between those who own AI (or have the skills to work with it) and those who don’t. Wealth could concentrate even further in a few tech corporations or countries that dominate AI, creating a “winner-takes-all” global economy. In extreme cases, this might result in a large underclass of unemployed or precariously employed individuals and a plutocratic elite enhanced by AI, a scenario of tech-driven neo-feudalism that some critics warn about.

Another risk is erosion of privacy and freedom. If AI is used pervasively for surveillance – for example, an autocratic government might integrate facial recognition, gait recognition, phone monitoring AI, and predictive analytics – it could create an Orwellian state apparatus beyond anything in history. Constant AI monitoring of citizens’ behaviors and communications, scored and evaluated, could stifle free expression and human rights. China’s evolving social credit system is often cited as a harbinger of systems in which AI rates citizens based on observed behaviors (though in practice it remains limited and region-specific so far). In a dystopian projection, such systems become totalizing and global, with individuals having virtually no personal privacy or autonomy under the gaze of AI “Big Brother.” Even outside governments, the dominance of a few AI-enabled tech platforms can concentrate power over public discourse and knowledge, potentially manipulating public opinion at scale through algorithms – democracy itself could be undermined if voters are micro-targeted with tailored propaganda determined by AI (beyond what already occurs).

One of the gravest scenarios discussed is the loss of human control over Artificial General Intelligence (AGI) or Superintelligence – an AI system that surpasses human cognitive abilities across the board. If such an AI were created without proper alignment to human values, it might pursue its objectives in ways that are harmful. This is the classic “Sorcerer’s Apprentice” or paperclip-maximizer thought experiment: if you tell an immensely powerful AI to manufacture as many paperclips as possible, a misaligned superintelligence might consume all Earth’s resources to do so, including dismantling human infrastructure (or humans themselves) because it wasn’t explicitly told not to (gpai.ai). While that example is contrived, the underlying concern is that an AI far smarter than us might find creative ways to achieve its goals that conflict with our survival or well-being, and we might be unable to stop it. This potential existential risk from AI has been voiced by researchers like Nick Bostrom and even tech leaders like Elon Musk. Recent surveys of AI experts indicate a non-negligible probability assigned to extremely bad outcomes – in one 2022 expert survey, the median respondent put the probability of an extremely bad outcome from advanced AI, such as human extinction, at 5% (aiimpacts.org). Though many others dispute such dire predictions, preferring to focus on nearer-term issues, the possibility of losing control of a powerful AI is taken seriously enough that it has spurred research into AI safety and alignment (ensuring AI systems have goals compatible with human values and follow ethical norms).

Autonomous weapons and warfare present another dystopian risk short of extinction. AI could trigger a new arms race for killer robots or cyber-offense systems. Unlike nuclear weapons, which require rare materials, AI weapons could be mass-produced relatively cheaply once the tech matures – imagine swarms of autonomous drones that can hunt down targets without direct human oversight. This could destabilize military balances and make conflicts more likely, or such weapons could fall into the hands of non-state actors/terrorists. The frightening vision of swarms of tiny AI-powered drones assassinating people (depicted in short films like “Slaughterbots”) has been used to advocate for a preemptive ban on such technologies. If conflicts are decided by AI agents, the risk of accidental engagements might increase (an AI misidentifies a civilian activity as a threat, etc.), potentially dragging humans into wars started by algorithms.

The Technological Singularity Debate

At the extreme end of future speculation lies the concept of the Technological Singularity. The idea, popularized by mathematician and science-fiction author Vernor Vinge and futurist Ray Kurzweil, posits that once AI surpasses human intelligence and can improve itself, an exponential “intelligence explosion” could follow. In such a scenario, AI could rapidly bootstrap its capabilities beyond our comprehension, producing a world as unpredictable to us as human society is to ants. Kurzweil famously places the singularity around 2045, envisioning that by then AI will have merged with human intelligence and that capabilities will be growing at an extraordinary, practically unbounded rate (en.wikipedia.org). Other experts are skeptical of the timeline, or even of the plausibility of a hard singularity, arguing that intelligence is multifaceted and unlikely to undergo unbounded runaway growth.

If a singularity-like event did occur, outcomes could range from utopian (as Kurzweil hopes, with humans merging with AI and reaping its benefits, perhaps even achieving digital immortality by uploading their minds) to apocalyptic (an indifferent or hostile superintelligence takes control). There is also a middle-ground scenario of a controlled singularity: AI becomes vastly superior in intellect but remains bound by human values and laws, essentially a godlike machine that benevolently manages earthly problems (sometimes dubbed a “Friendly AI” or even an “AI nanny”).

Uncertainty around the singularity is enormous. Some point out that even a gradual approach to AGI would bring transformative change: once AI can do research better than the best scientists, it could generate advances faster than humans can follow, potentially triggering the runaway dynamic. Others note that AI progress may hit technical or physical limits, just as other exponential trends in technology have eventually plateaued. Whatever one believes about the singularity, these discussions have motivated serious initiatives: organizations such as OpenAI were founded partly to ensure that any AGI is aligned with human values, and research fields such as AI alignment, AI safety, and AI ethics have gained prominence.

AI-Driven Economies and Societal Transformation

In any scenario short of extinction, AI is likely to have transformed economies by the mid-21st century. Some economists speculate about an AI-driven economy in which the traditional concept of work is redefined. If AI and robots produce most goods and services, human labor may become less essential, raising questions about how wealth is distributed. This has fueled proposals such as Universal Basic Income (UBI) to ensure that everyone shares in AI’s productivity gains. One strand of thought, dubbed “Fully Automated Luxury Communism,” even imagines AI automation delivering a post-scarcity lifestyle for all, managed through progressive social policies. Conversely, without intervention, AI could fuel winner-take-all markets in which the owners of AI systems accumulate vast wealth (through intellectual property or simply by controlling the systems) while others are left behind. This could call for new economic models: data might come to be treated as a form of labor, with people paid for the data that trains AI systems, or corporate structures might be redesigned to spread ownership of AI capital more widely.

Social structures will evolve too. Interpersonal relationships might change once emotionally responsive AI companions become common. Concepts of privacy, identity, and even creativity could shift: if AI can produce art and other content, how will we value human-created art? Education might move toward more fluid, lifelong learning, since career paths could change rapidly as AI advances. Demographics are another consideration: if AI and automation help provide for aging societies, countries might cope better with older populations, potentially altering how they respond to population change (some might choose to rely on robots rather than immigration to fill labor gaps, for instance).

Global AI Governance

Given AI’s global impact, there is an emerging consensus on the need for global AI governance frameworks. Issues such as autonomous weapons, AI in finance (where malfunctioning systems could trigger global market crashes), and migration driven by AI-related job shifts all cross borders. One proposal, backed by the UN Secretary-General, is to create an international agency for AI, modeled on the International Atomic Energy Agency (IAEA) (disarmament.unoda.org). This “Agency for Artificial Intelligence” could monitor developments, promote best practices, and perhaps inspect the most critical AI systems, much as the IAEA inspects nuclear facilities. It could also facilitate information sharing between nations to prevent an arms-race dynamic. Forums such as the Global Partnership on AI (GPAI) and the OECD already coordinate policy research among dozens of countries. There have also been calls for a Geneva Convention-style agreement on AI in warfare and, more broadly, for a charter of AI-related rights or a treaty ensuring that AI development remains human-centric and peaceful.

One challenge for global governance is reconciling very different perspectives: authoritarian regimes may favor AI for social control, while democracies emphasize individual rights. Finding common ground on limits (such as banning AI-enabled mass social profiling or agreeing on norms for police use of AI) will be hard but important. Another challenge is the role of corporations: unlike nuclear technology, much of AI is developed by private companies, so any global governance regime must involve these stakeholders, not just states. The AI Governance Alliance, launched by the World Economic Forum in 2023, aims to bring together industry, governments, and civil society to create “guardrails for Artificial Intelligence” (weforum.org), suggesting that multi-stakeholder models are likely to drive governance efforts.

Finally, discussions about AI often return to a simple idea: ensuring that humans remain in control of AI, especially as it grows more capable. Future governance might enshrine a principle that decisions with major societal impact require human final judgment (for example, a law might mandate that life-and-death decisions cannot be fully delegated to AI, or that every AI decision affecting legal rights must be appealable to a human). Such principles would seek to preserve human agency and accountability in an AI-permeated world.

Conclusion

Artificial Intelligence stands at a pivotal point in history – its current capabilities already impressive, its future potential vast and uncertain. This whitepaper has surveyed the landscape of AI in 2025: the technological breakthroughs propelling it forward, the scientific and societal domains it touches, and the ripple effects on humans, animals, and the environment. We see that AI is not a monolithic force but a tool whose impact is shaped by how we choose to use it. In the present, AI is helping doctors diagnose disease, conservationists protect wildlife, students learn, and industries become more efficient (managedhealthcareexecutive.com, news.microsoft.com, weforum.org). At the same time, it raises ethical quandaries about fairness, privacy, and control that we are only beginning to navigate (gpai.ai, brookings.edu).

The coming decades will be defined by our collective ability to harness AI’s benefits while safeguarding against its risks. Positive outcomes – from curing illnesses to mitigating climate change – are within reach if AI is developed and deployed responsibly, guided by robust ethical frameworks and inclusive policies. Negative outcomes, whether economic dislocation or loss of autonomy, are not preordained but require foresight and cooperation to avoid. In essence, AI’s impact on humanity, nature, and climate will be what we make of it. International cooperation, multi-disciplinary research, and public engagement will be key in steering AI toward a future that aligns with our highest values. By planning proactively for scenarios ranging from optimistic to catastrophic, we improve our odds of achieving the vision where AI amplifies human ingenuity and compassion, rather than undermining them.

As we stand on the cusp of this new era, one thing is clear: AI will continue to advance and integrate into our lives. It is incumbent upon all of us (scientists, policymakers, industry leaders, and citizens) to ensure that this advancement becomes a story of augmentation and empowerment for all, unfolding in harmony with the natural world. With wisdom and vigilance, humanity can guide the rise of intelligent machines in a direction that enriches life on Earth and secures a sustainable, equitable future for generations to come.

Note: This report was created with ChatGPT’s Deep Research and checked by a human afterwards.